The Assessment Knowledge Base is continuously being improved and expanded. Knowledge Base News is updated with new information, trainer-led video content, and more with each release. Learn more about new knowledge base content by reading Knowledge Base News.

Release Timeline:

October 6, 2025: Released to training sites

November 2025: Released to production sites

Learn more about release enhancements by registering for the v3.112 Release Webinar! After registration, enrollment automatically continues for all future webinars; no re-registration is required. Please join every release webinar, as scheduling allows.

Data Sets

Multiple data set descriptions have been updated to be more precise, helping users understand the organizational ownership requirements for accessing specific assessment data. Learn more about data sets.

| Data Set | Change |
|---|---|
| Assignment Linking Analysis by Course Section | Renamed to Assignment Linking Analysis by Program. Description updated to: This data set exports a comprehensive CSV summary of program & assessment details, including program metadata, outcomes, Program Assessments, and information about the current status of Assignment Linking, neatly organized by course/co-curricular section. To view the assignment links made for a program, the data set must be created based on the owner, Department, and College associated with those assignment links. Learn more. |
| Program Assessment Results by Student | This data set easily exports a complete summary of Program Assessment results for each student. The information is neatly organized in CSV format, and each student's entry in this report is labeled with their University ID, along with any other student attributes that may be provided from the Institution's Student Information System Import File. To view the assessment results via this data set, the data set must be created based on the owner Department and College of the course section assigned to the assessment. Learn more. |
| Program Assessment Results by Course/Term-Based Co-Curricular Sections | This data set exports a comprehensive CSV summary of Program Assessment results, neatly organized by course/Term-Based Co-Curricular Section. To view the assessment results via this data set, the data set must be created based on the owner Department and College of the course section assigned to the assessment. Learn more. |
| Program Management Analysis | This data set exports a comprehensive CSV summary of program & assessment details, including program metadata, outcomes, Program Assessments, and information about the current status of assignment linking, neatly organized by program curriculum mapping. To view the assignment links made for a program, the data set must be created based on the owner, Department, and College associated with those assignment links. Learn more. |

Data Sets - Juried Assessment

The Assessor Scoring Progress and Assessor Scores data sets have been enhanced to display the term information for a Juried Assessment. Now, when term information is provided, the term_code and term_name columns display the associated term. This enhancement improves the accuracy and consistency of exports and downstream reporting. Learn more about the Assessor Scoring Progress or the Assessor Scores data sets.

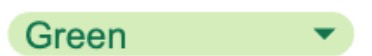

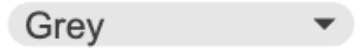
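
As a rough illustration of how the new columns might be used in downstream reporting, the Python sketch below groups the rows of an exported CSV by term. Only the term_code and term_name column names come from this release; the assessor and score columns, the sample rows, and the helper name are hypothetical, and the actual export layout may differ.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample of an Assessor Scores export. Only term_code and
# term_name are named in the release notes; the other columns are invented.
SAMPLE_EXPORT = """assessor,score,term_code,term_name
Assessor 1,3,2025FA,Fall 2025
Assessor 2,4,2025FA,Fall 2025
Assessor 3,2,,
"""


def group_rows_by_term(csv_text):
    """Group export rows by (term_code, term_name).

    Rows without term information are grouped under ("", ""), on the
    assumption that the term columns are empty when no term is provided.
    """
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = (row.get("term_code") or "", row.get("term_name") or "")
        groups[key].append(row)
    return dict(groups)


rows_by_term = group_rows_by_term(SAMPLE_EXPORT)
for (code, name), rows in rows_by_term.items():
    label = f"{code} ({name})" if code else "no term provided"
    print(label, len(rows))
```

Grouping on both columns keeps the code and the display name together, so a report can show either one without a second lookup.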

Evidence Versioning

The Evidence Bank has been enhanced with new functionality for version tracking and visibility across evidence artifacts. Once evidence has been revised, version numbers will display in the Evidence Bank and the self study Related Documentation page when viewing evidence details, and the Related Documentation page can be filtered to quickly locate items requiring attention. Platform action items and notification emails will alert users and provide guidance to quickly take action when evidence edits occur. The Evidence Usage Audit report now includes versions, sections, and self study details to support traceability and auditing.

Now, indicators and banners will display on the Related Documentation page when an action is required, allowing Self Study Managers to accept or decline changes originating from the Evidence Bank. Together, these updates reduce manual rework, help Institutions see what changed and where, and strengthen compliance by providing comprehensive audit trails between the Evidence Bank and self studies. Learn more about the Evidence Bank.

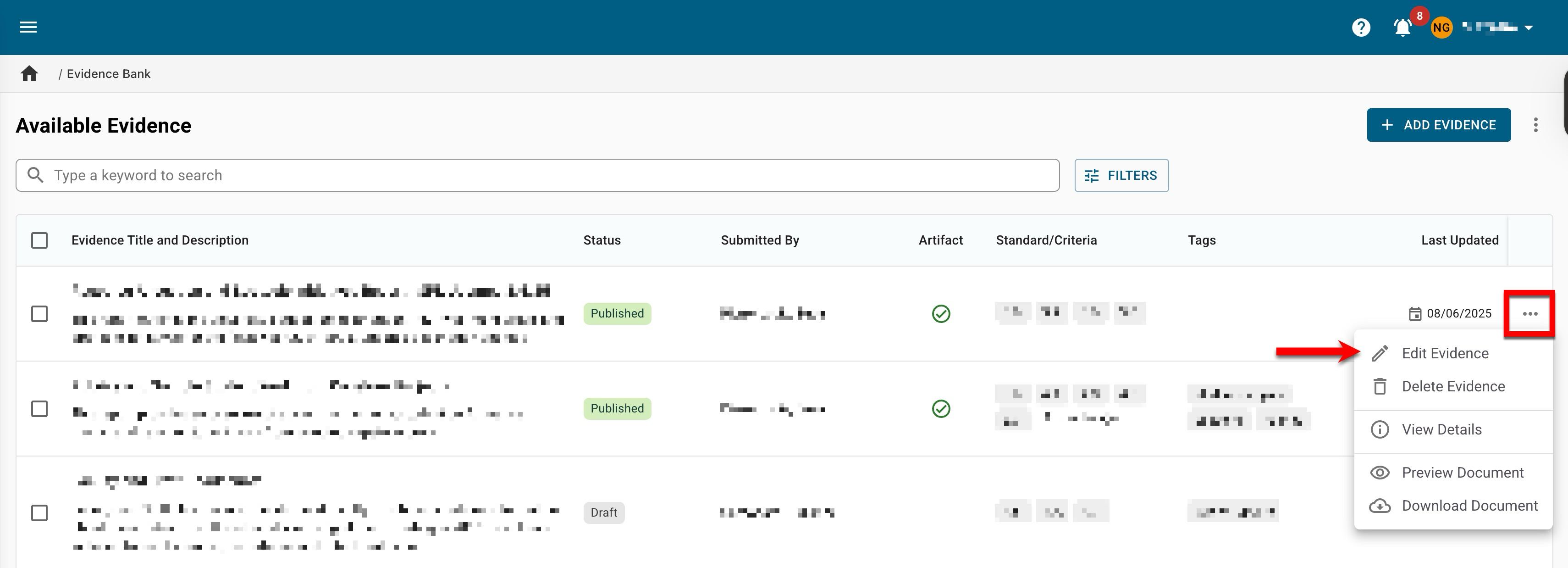

Editing Evidence

Editing evidence no longer results in an In Revision status. When a piece of evidence is edited, it will now continue to display as published evidence and will remain in Published status. Learn more about the Evidence Bank.

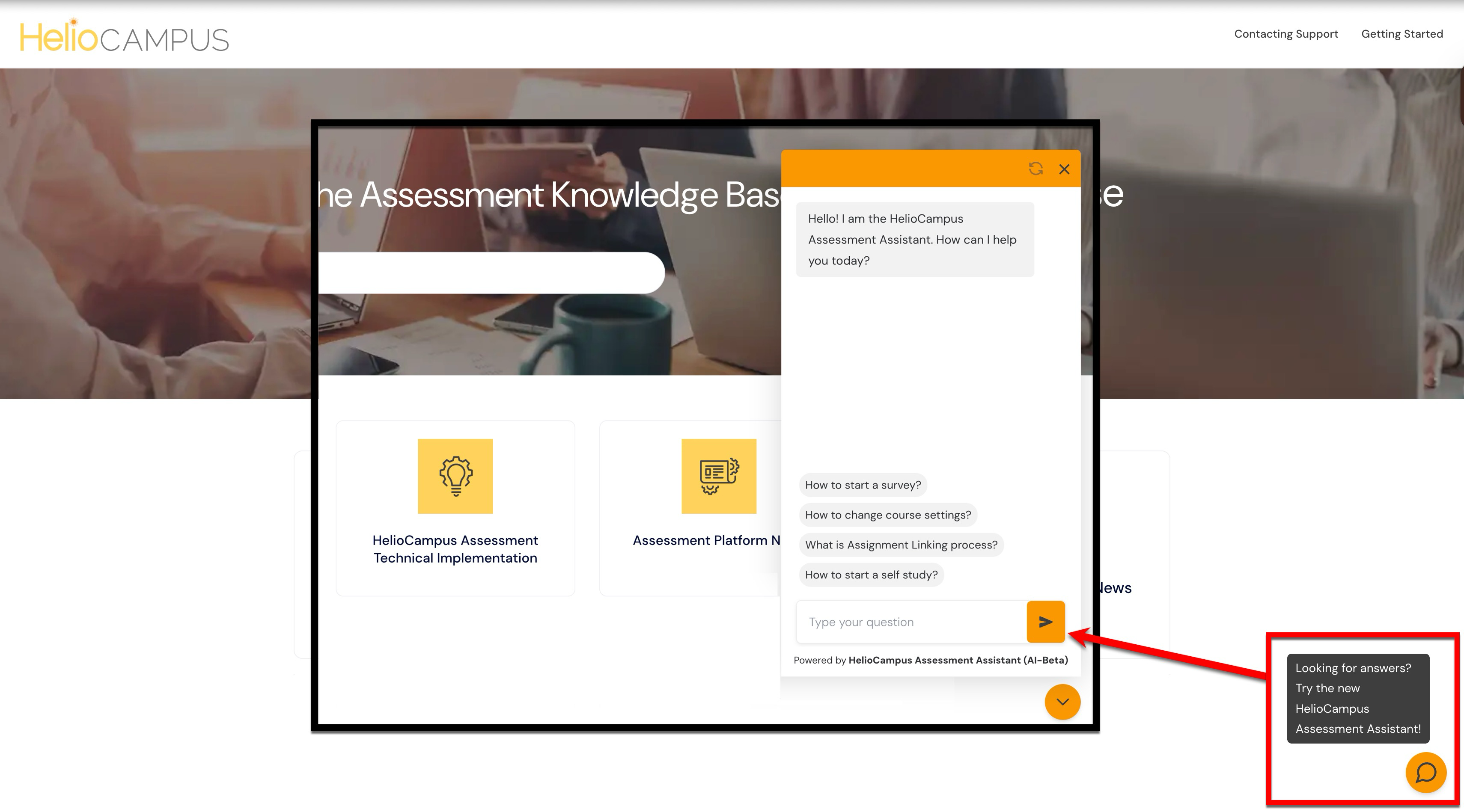

HelioCampus AI Assistant

Get answers faster with the new HelioCampus AI Assistant powered by the Assessment Knowledge Base. Ask questions about assessments, credentialing processes, platform usage, and more. The AI Assistant will guide readers to the right articles and steps.

- Get quick answers from multiple help articles in one place with step-by-step walkthroughs
- Article links open directly to the related resource in a new tab

This assistant is designed for common “how do I…” questions and platform navigation assistance, covering assessment setup and scheduling, credentialing workflows, configuration help, best practices, and more. Learn more.

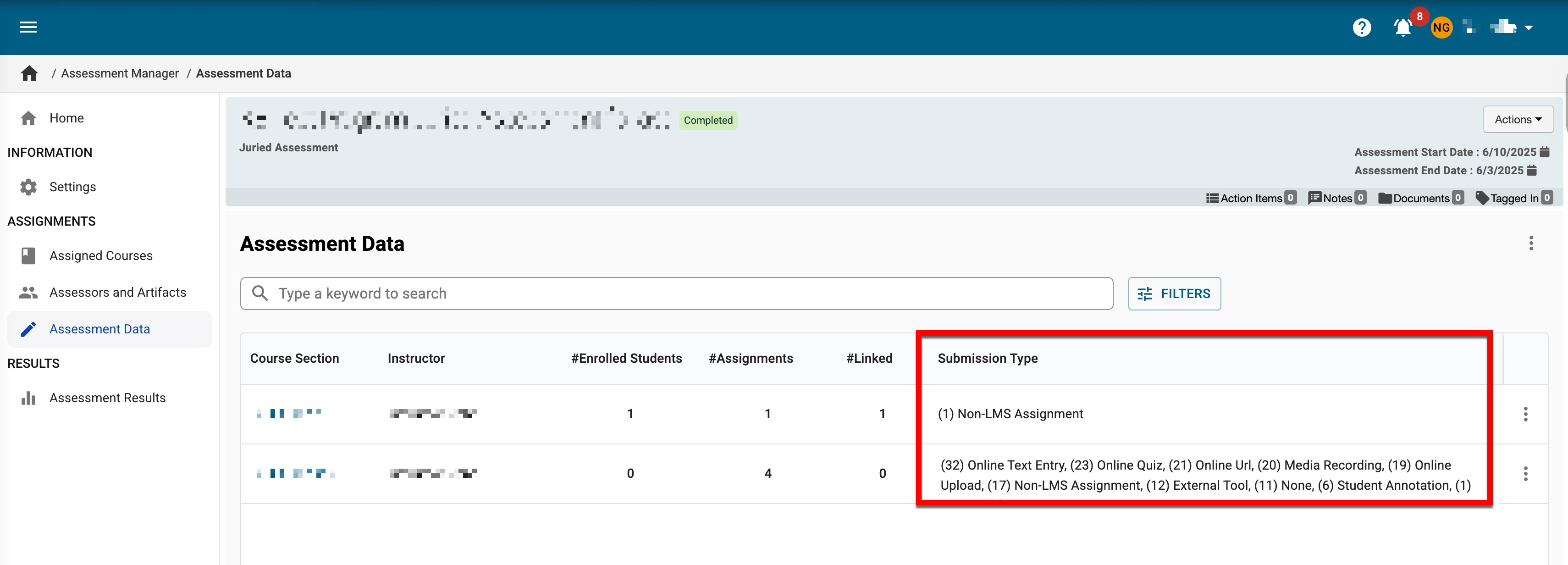

Juried Assessment Submission Types

A new column has been added to the Assessment Data page for a Juried Assessment. This new column displays the assignment submission type and the count of submissions. This enhancement provides coordinators with insight into the type of submissions and the ability to troubleshoot submission issues. Learn more about Juried Assessment and the Assessment Data page.

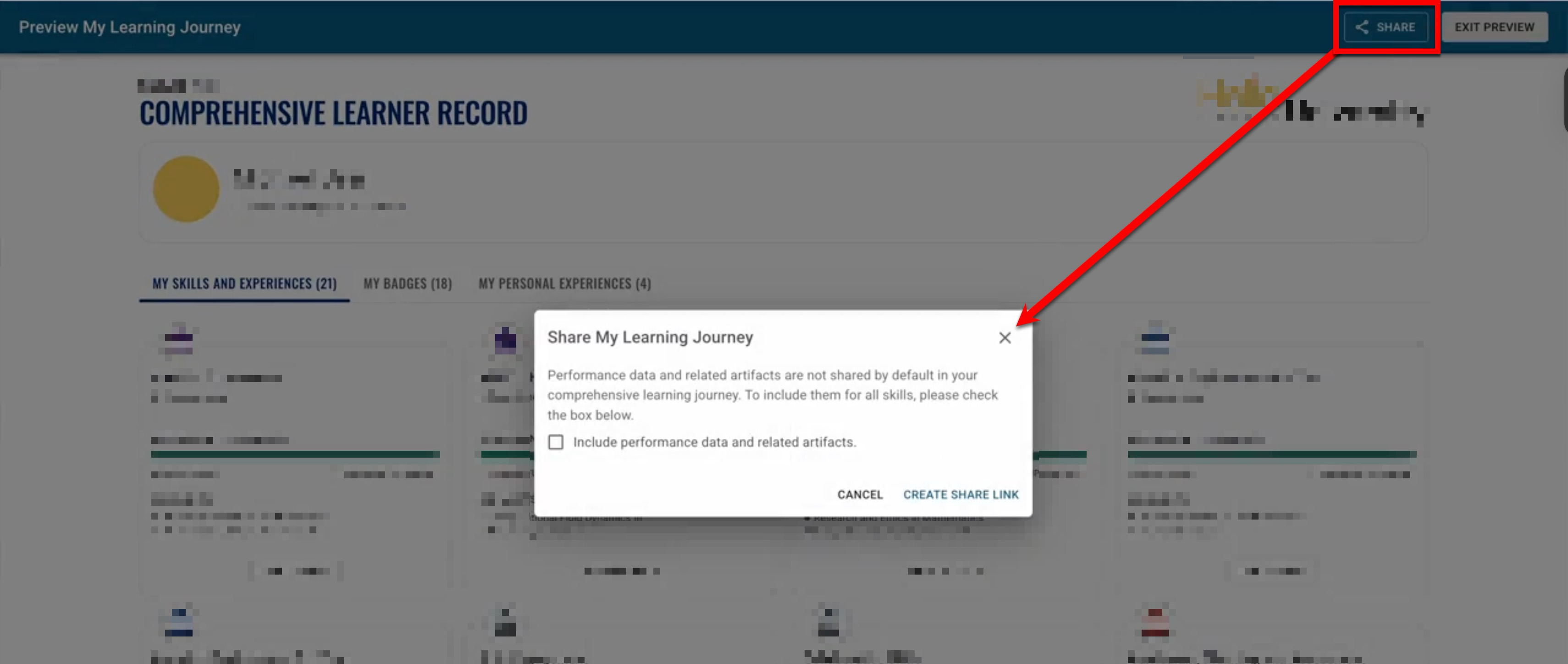

Sharing a Comprehensive Learning Journey

Students can now share a URL of their comprehensive learning journey, with an option to include performance data. This enhancement makes it easier to showcase progress to advisors, employers, and reviewers. Learn more about sharing a learning journey.

Learning Journey Experiences

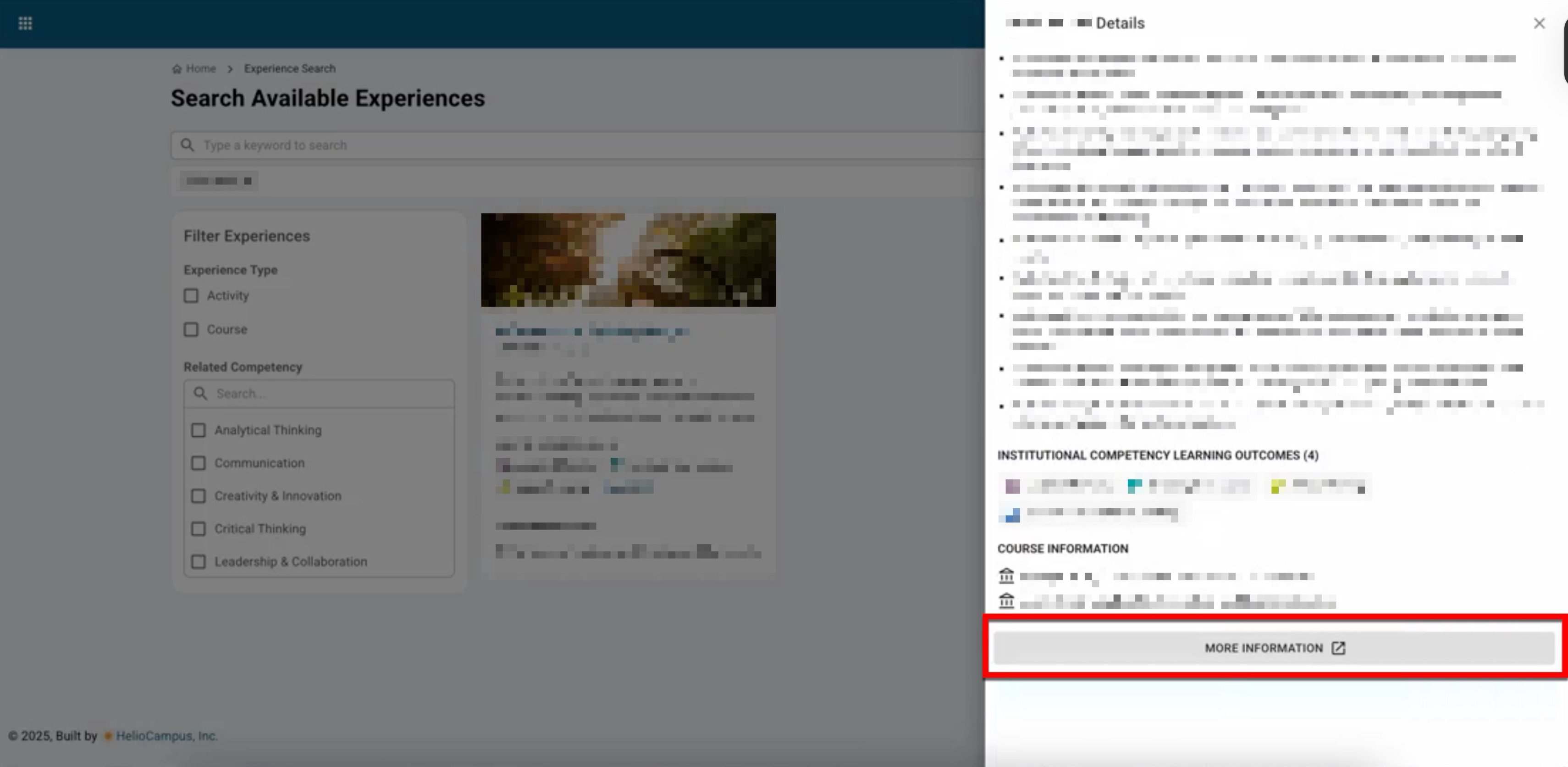

The Learning Journey Experience Details drawer has been enhanced to include a new button for viewing additional experience information. When Institutions provide a URL description in their data files, the More Information button will open the provided URL in a new tab. This enhancement provides valuable context to learners and advisors, reducing the need to navigate away from the platform page. Learn more about searching for experiences.

Learning Journey Skills

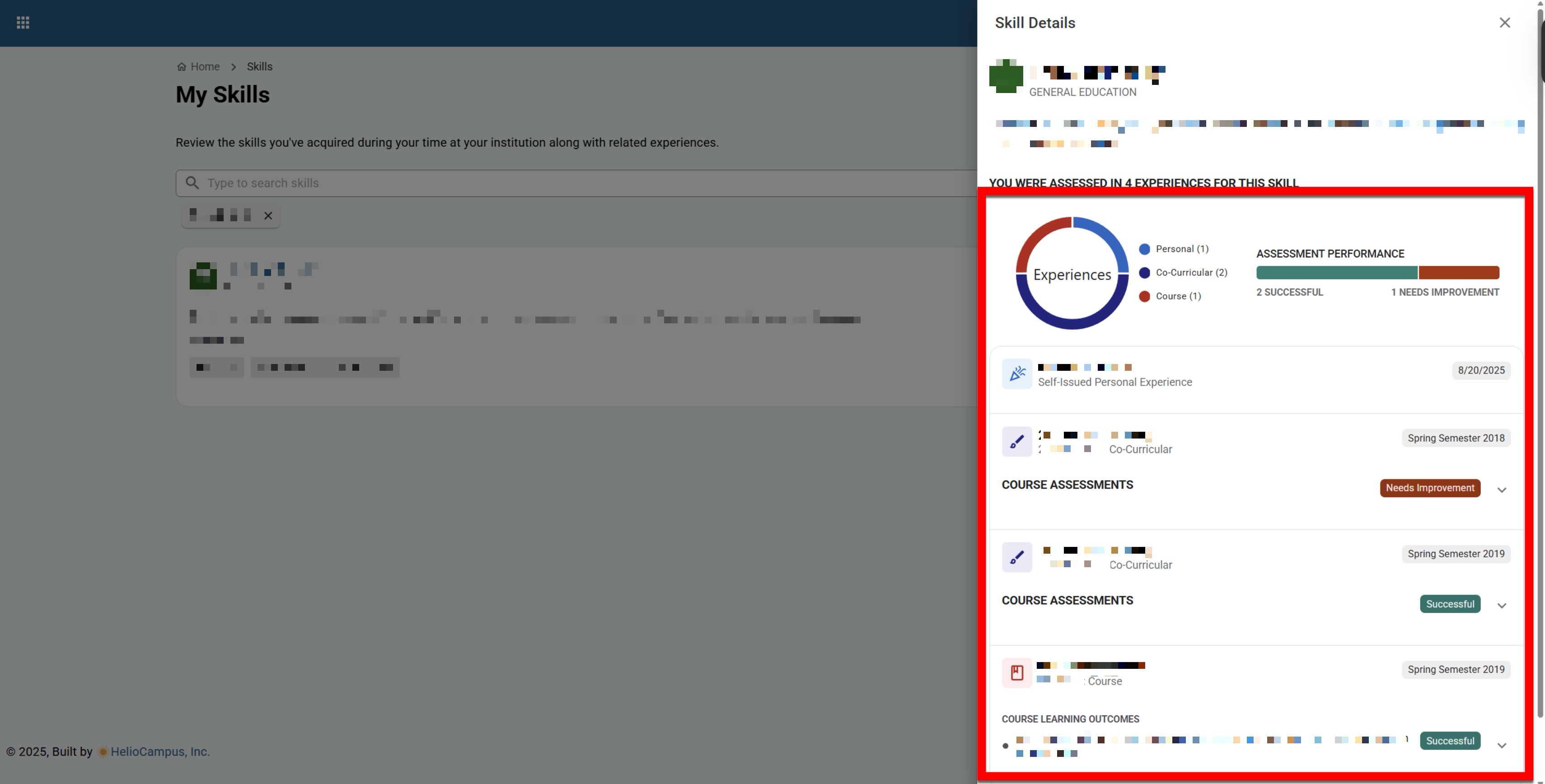

When viewing skills, the Skill Details drawer now includes performance visualizations showing successful and unsuccessful completions across related experiences. This improvement provides quick, at-a-glance insight into outcome achievement to guide advising and planning. Learn more about Skills.

Legacy User Guides

Legacy user guides are now available in the Assessment Knowledge Base. These articles were created for a previous version of the platform and may contain screenshots or instructions that differ from the current platform interface. While HelioCampus works to update these articles with the latest features and improvements, they continue to be a valuable resource for understanding core functionality and processes. Learn more about these legacy user guides.

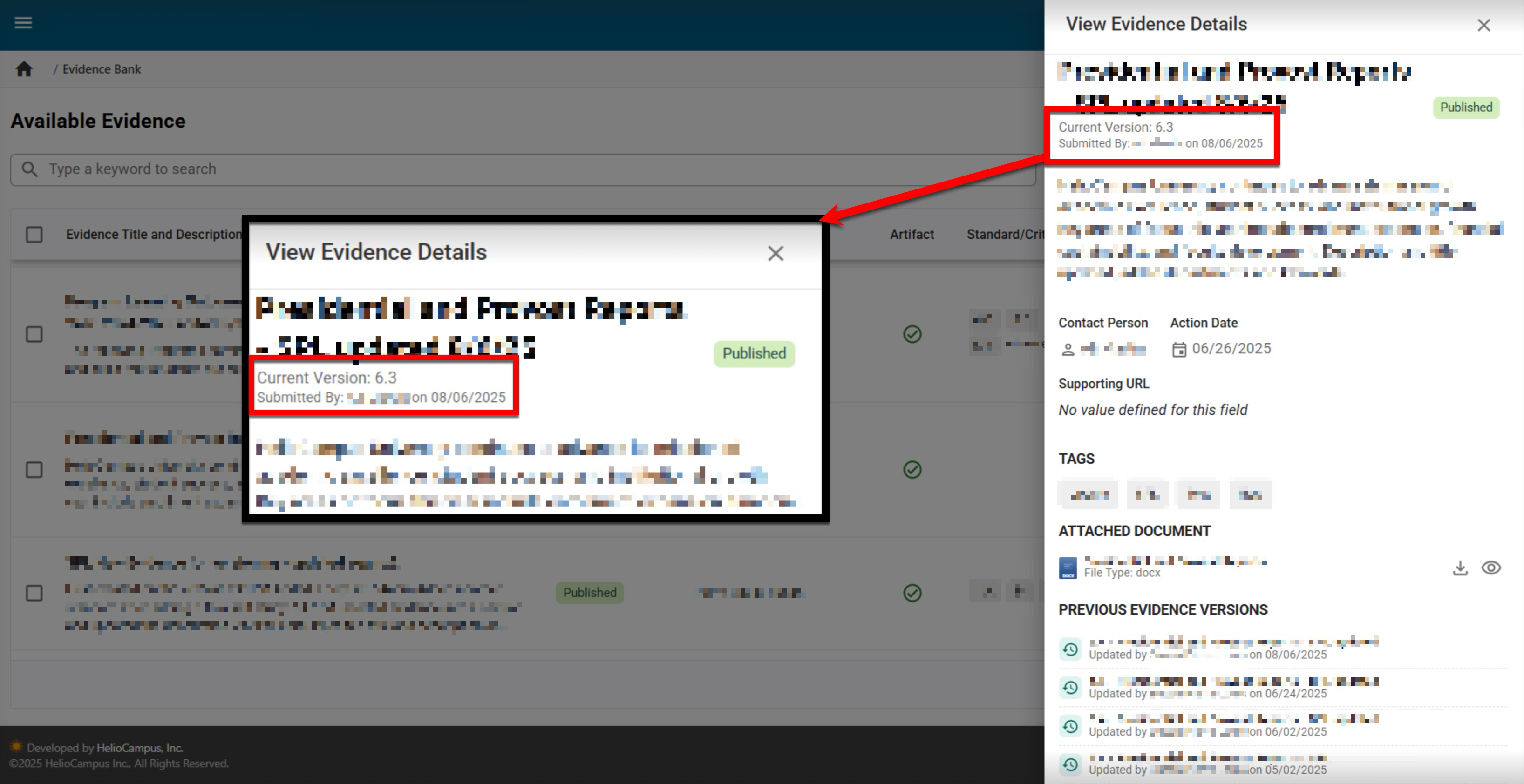

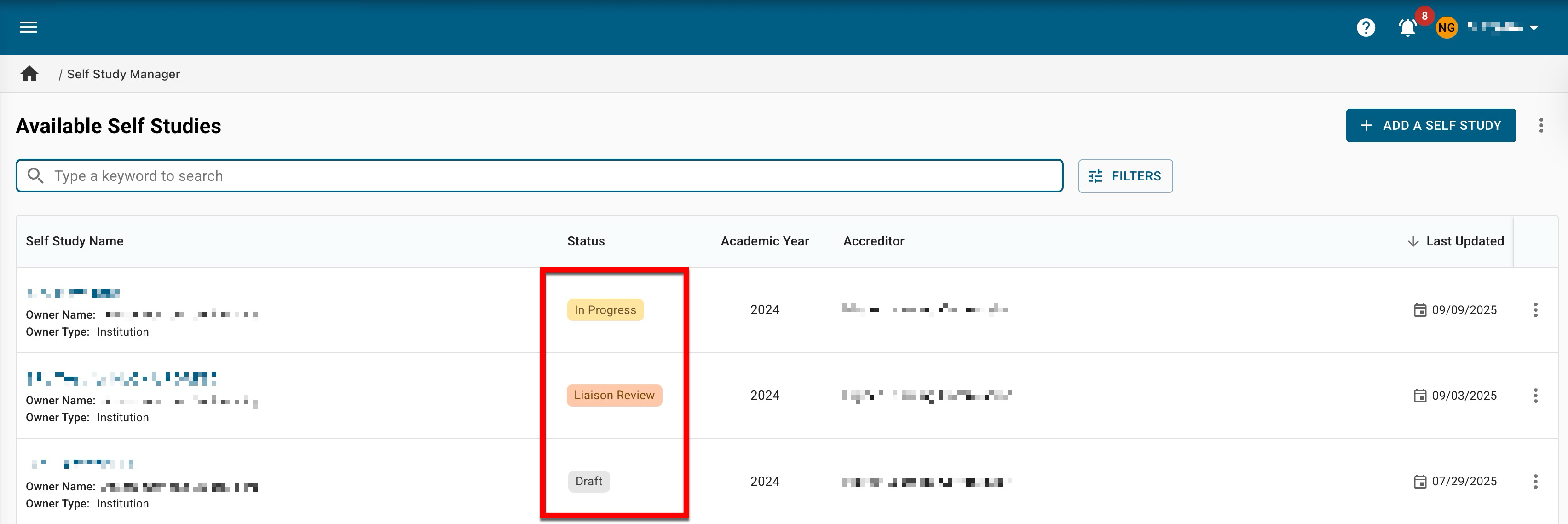

Platform

Status colors across the platform have been standardized to improve clarity and accessibility. A consistent palette now highlights only the most important statuses, uses a common color for less critical states, and meets contrast guidelines. This is a visual-only update that affects all platform pages displaying statuses. Learn more about platform status colors.

| Status Color | Details |
|---|---|
| | An item is active, or a process is currently running smoothly. |
| | An item is blocked or is causing a critical issue. |
| | An item requires immediate action. |
| | An item requires action, but is of lower priority. |
| | An item is completed or has a successful outcome. |
| | An item is in a neutral state and does not require action. |

Programs

New revision functionality is now available for programs in Published status. Now, some program settings relating to assessment, notifications, and Program Learning Outcomes (PLOs) can be edited without needing to revise and create a new version. This enhancement affects fields and configuration points on the following program pages:

- Details
- Settings
- Student Outcomes

| | |
|---|---|
| Editing | Edits are allowed in published programs. They are retroactive and will be applied to all historical versions of a program. In general, edits should be reserved for minor, non-substantive changes to a program. |
| Revising | Revisions create a new version of the program. Revisions are not retroactive, and historical versions of the program will be retained. Create a new version of the program when making substantive changes to outcomes, program structure, or curriculum. |
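
The difference between editing and revising can be sketched in a few lines of Python. This is only an illustrative model, not the platform's implementation: the Program class, its versions list, and the field names are all hypothetical.

```python
from copy import deepcopy
from dataclasses import dataclass, field


@dataclass
class Program:
    """Hypothetical model: a program is a list of version snapshots (dicts)."""

    versions: list = field(default_factory=list)

    def edit(self, **changes):
        # Edits are retroactive: the change is applied to every version,
        # including historical ones.
        for version in self.versions:
            version.update(changes)

    def revise(self, **changes):
        # Revisions are not retroactive: copy the latest version, apply the
        # change to the copy, and leave earlier versions untouched.
        new_version = deepcopy(self.versions[-1])
        new_version.update(changes)
        self.versions.append(new_version)


program = Program(versions=[{"name": "BS Biology", "outcomes": ["PLO 1"]}])
program.revise(outcomes=["PLO 1", "PLO 2"])  # substantive change: new version
program.edit(name="B.S. Biology")            # minor change: all versions
```

After these two calls the program has two versions. Both carry the edited name, but only the newer version lists the added outcome.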

Learn more:

| | |
|---|---|
| Details | By editing a program: |
| Settings | By editing a program: Product Tip: If the Proficiency Scale and/or Course Revision Notification for Program Coordinator email notification has been locked at a level of the Organizational Hierarchy, neither can be edited at the program level. Learn more about locking organizational settings. |
| Student Outcomes | By editing a program: |

A new email notification is available via the Program Settings pages at all levels of the Organizational Hierarchy. The new Program Import File Revision Notification notifies Program Coordinators that a program has been revised via the automatic nightly import file. By default, this new email notification is disabled. Learn more about configuring and using email notifications.

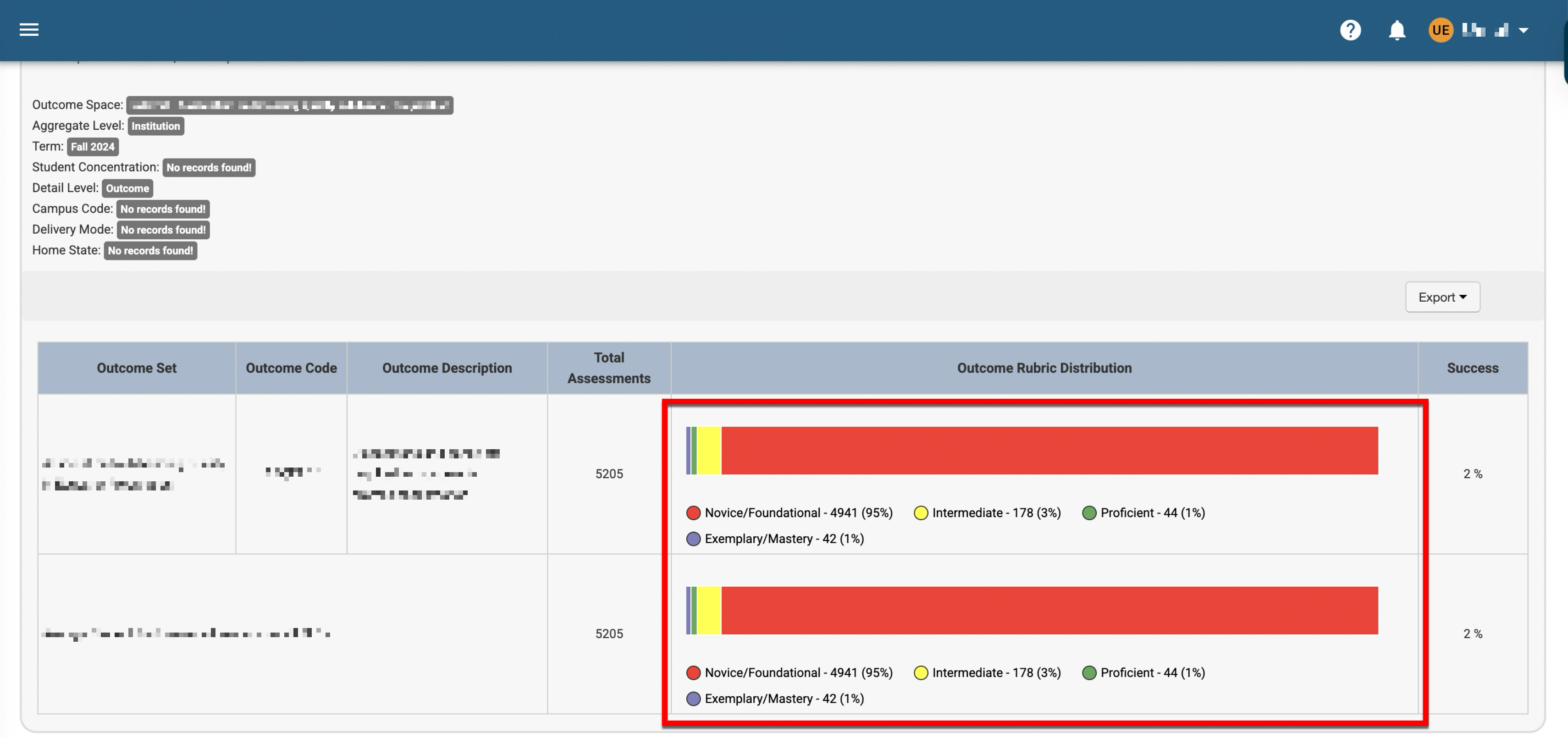

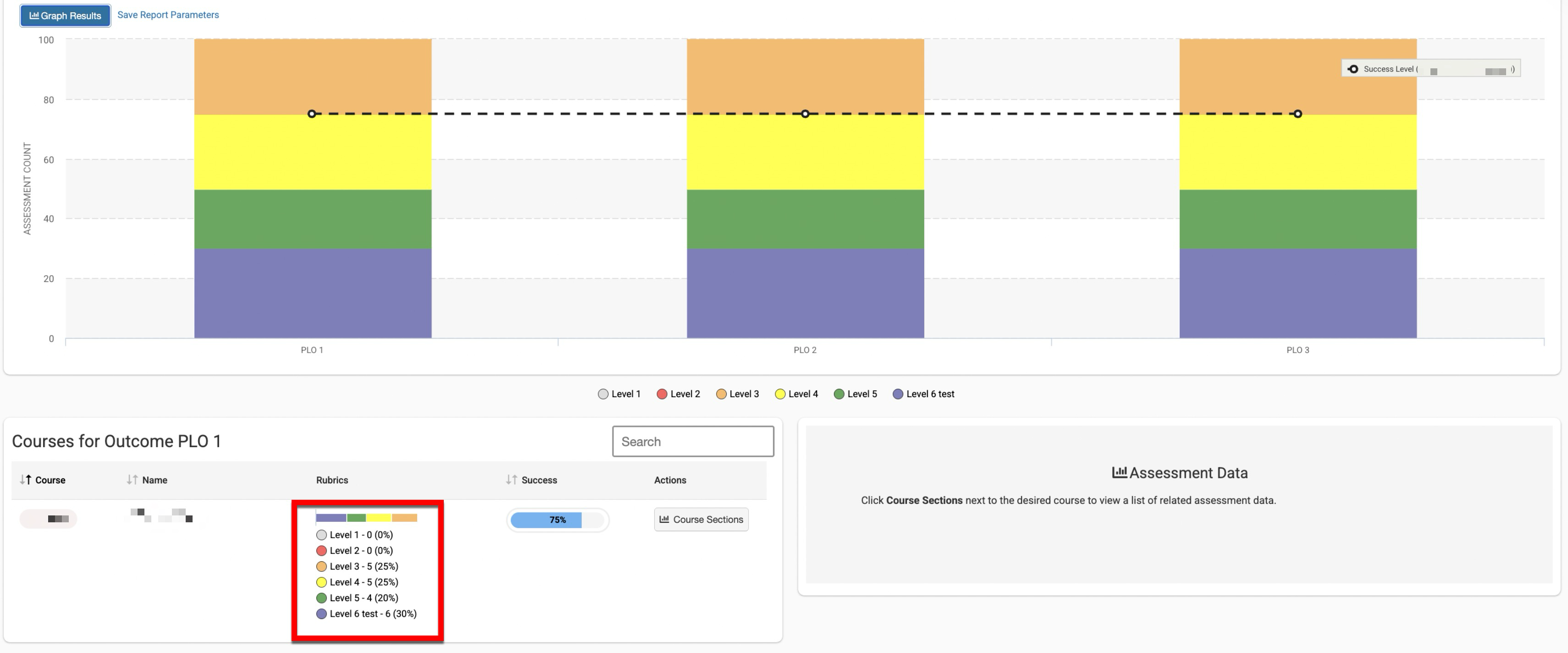

Report Legends

Legends are now displayed beneath charts with color dots and are visible in exports. This enhancement improves readability and accessibility without relying on hover tooltips. This enhancement has been implemented for the following reports:

- Direct Assessment Graphs
- Outcome Assessment Summary

Additionally, counts and percentages are now included in consolidated chart legends and removed from bars. This update standardizes legend style across reports and improves interpretability in exports.

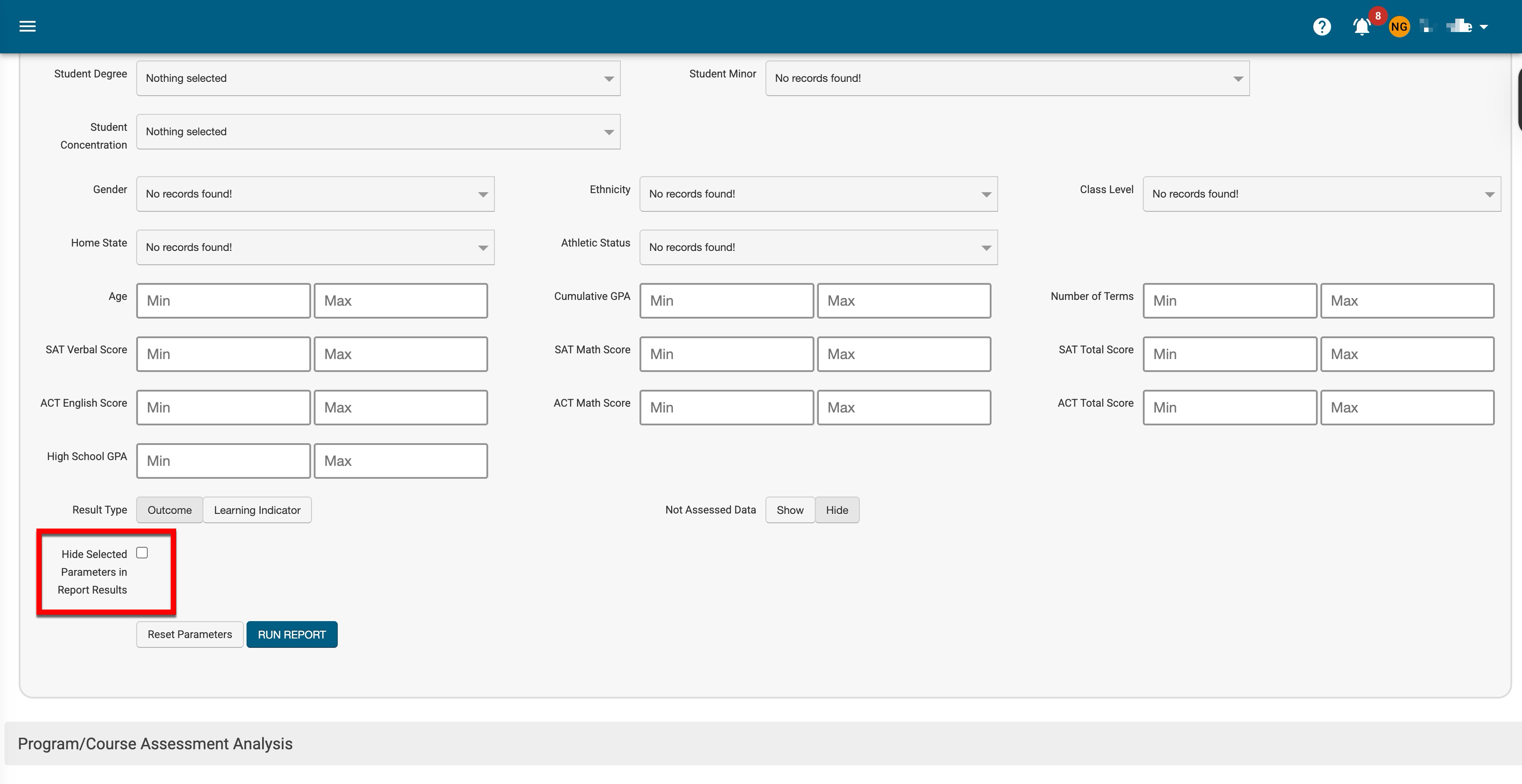

Report Parameters

The following reports now include standardized, unified parameters and a control for showing or hiding the selected parameters in report results. This update improves transparency in shared reports and produces cleaner, more focused exports. Learn more about the Report Library.

Program/Course Assessment Reports:

- Direct Assessment Graphs
- Student Outcome Achievement
- Outcome Assessment Summary
- Program/Course Assessment Analysis

Assignment Reports:

- Assignment Details Audit
- Assignment Scoring Results
- Assignment Linking Audit
- Assignment Linking Results

These reports now include a new Hide Parameters in Report Results checkbox, which is disabled by default. When enabled, the parameters configured for the report are not included in the report output. When disabled, parameters and their selections are included in the report output. Learn more about the Report Library.

Student Metadata Reporting Parameters

Further enhancements have been made to enable automated support for student metadata parameters used across all assessment reports. When provided to HelioCampus via the Student data file, the student metadata parameters included below can be used to configure student-specific data for all assessment results. This update enables more precise disaggregation and equity analyses without manual steps. Learn more about the Report Library.

- Age
- Ethnicity
- Gender
- Home State
- Highschool GPA
- Cumulative GPA
- Athletic Status
- Number of Terms
- Class Level
- ACT English Score
- ACT Math Score
- ACT Total Score
- SAT Math Score
- SAT Verbal Score
- SAT Total Score

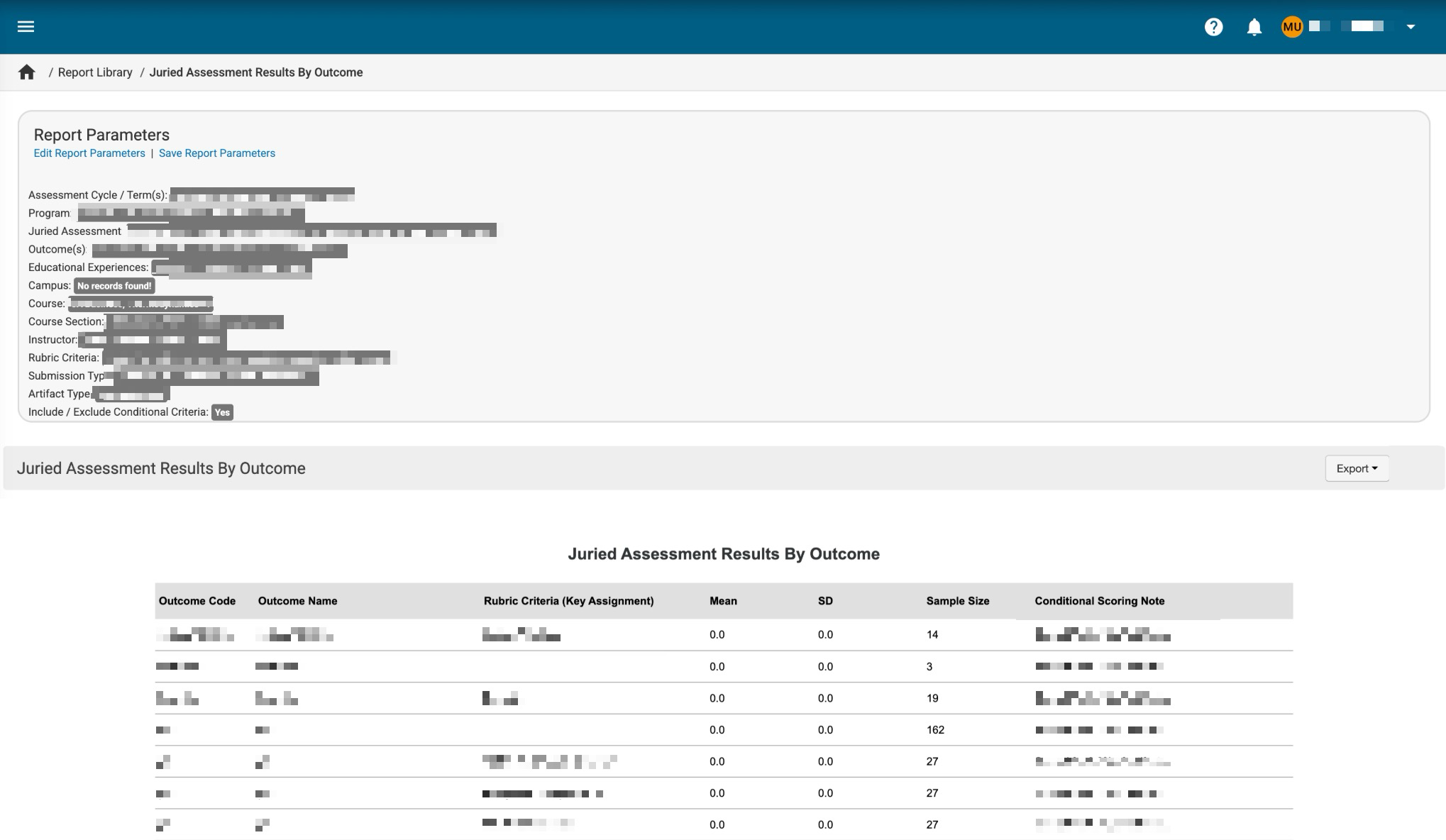

Juried Assessment Results by Outcome Report

A new report is available that aggregates Juried Assessment results by outcome and rubric criterion, including mean scores, standard deviations, and sample sizes. The Juried Assessment Results by Outcome report summarizes student performance for each learning outcome assessed in a selected Juried Assessment. This report aggregates rubric scores from multiple assessors, organized by outcome and mapped criteria, to support program review, accreditation, and institutional effectiveness. Learn more about the new Juried Assessment Results by Outcome report.

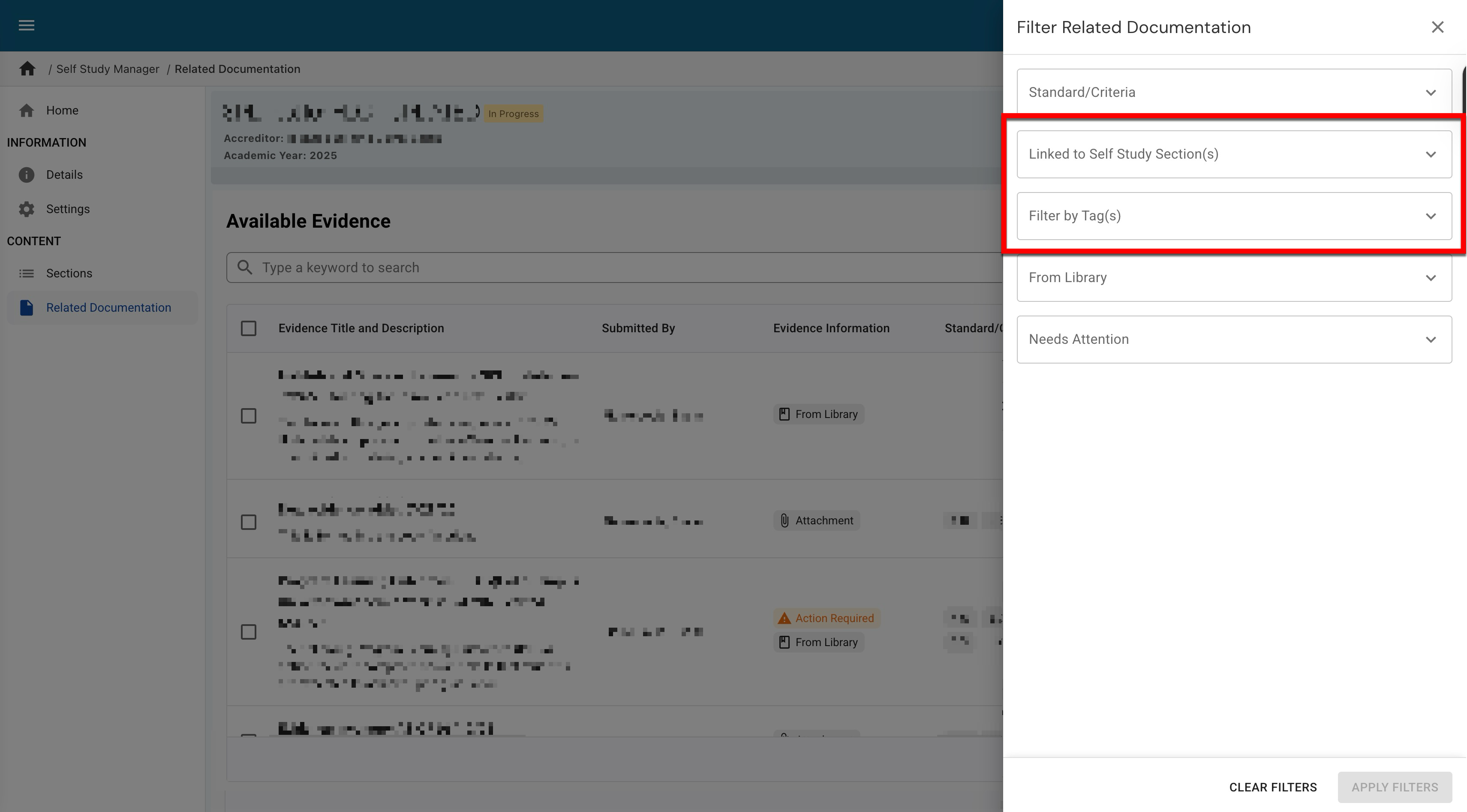
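
The kind of aggregation described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the report's actual calculation: the record layout, the sample scores, and the summarize function are hypothetical, and the sketch assumes a sample (rather than population) standard deviation.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical rubric scores: (outcome, criterion, assessor, score).
scores = [
    ("Outcome 1", "Criterion A", "Assessor 1", 3),
    ("Outcome 1", "Criterion A", "Assessor 2", 4),
    ("Outcome 1", "Criterion A", "Assessor 3", 5),
    ("Outcome 2", "Criterion B", "Assessor 1", 2),
]


def summarize(records):
    """Aggregate scores from multiple assessors by (outcome, criterion)."""
    grouped = defaultdict(list)
    for outcome, criterion, _assessor, score in records:
        grouped[(outcome, criterion)].append(score)

    summary = {}
    for key, values in grouped.items():
        summary[key] = {
            "n": len(values),
            "mean": mean(values),
            # Assumption: sample standard deviation, which is undefined
            # for a single score, so None is reported in that case.
            "std_dev": stdev(values) if len(values) > 1 else None,
        }
    return summary


summary = summarize(scores)
for (outcome, criterion), stats in summary.items():
    print(outcome, criterion, stats)
```

Reporting the sample size next to each mean makes it easier to judge how much weight a given outcome score can bear when only a few assessors contributed.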

Self Study Evidence

Filter functionality has been enhanced to enable filtering by whether evidence is or isn’t associated with a self study section, or by specific tags. This enhancement reduces the time spent searching for evidence. Learn more about Related Documentation.

Survey Manager

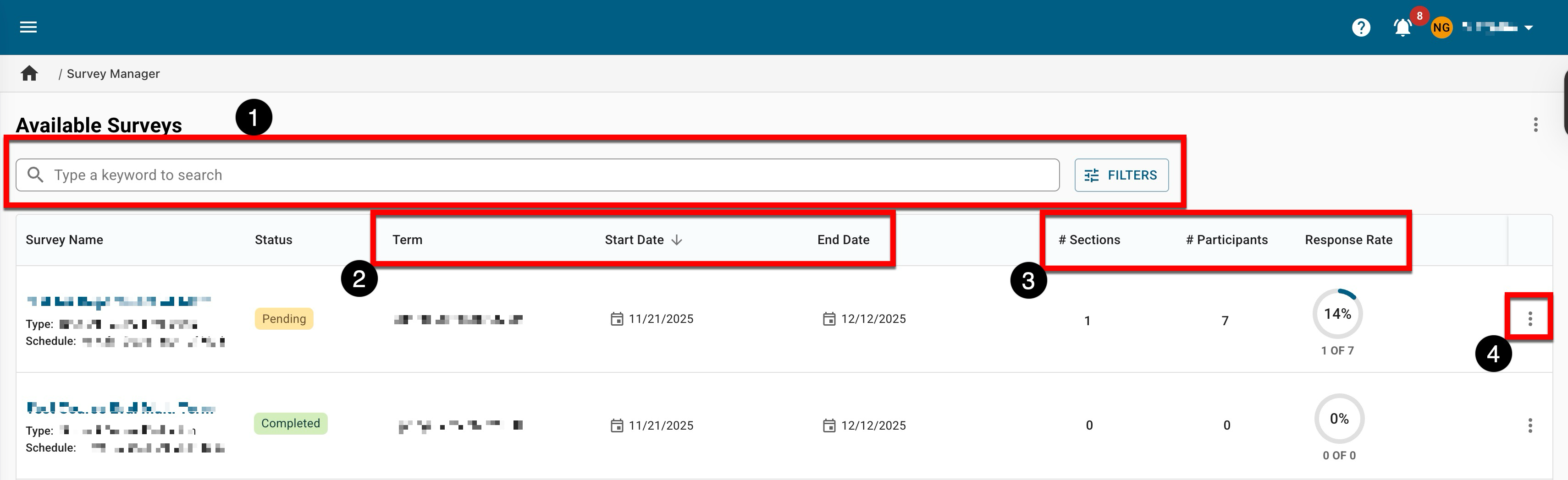

The Survey Manager has been updated with an improved user interface. Search functionality is available, and by clicking the Filters option, specific filters can be applied (1). Term and survey schedule date information is displayed (2), as well as quick-glance survey data (3). By expanding the Action kebab, users can navigate to the Survey Results page (4). Learn more about the Survey Manager.

Surveys - Partial Terms

Timing for email notifications associated with partial-term surveys has been enhanced to reflect a partial term’s timeline rather than the related parent term timeline.

- Survey start notifications to Instructors are now sent at each partial term’s faculty notification date.
- Results available notifications are now sent at each partial term’s results available date.

Notification templates remain editable until all associated dates have passed. This enhancement assists Instructors participating in courses that use partial terms and Administrators coordinating survey communications across staggered schedules. Learn more about surveys and Partial Terms.

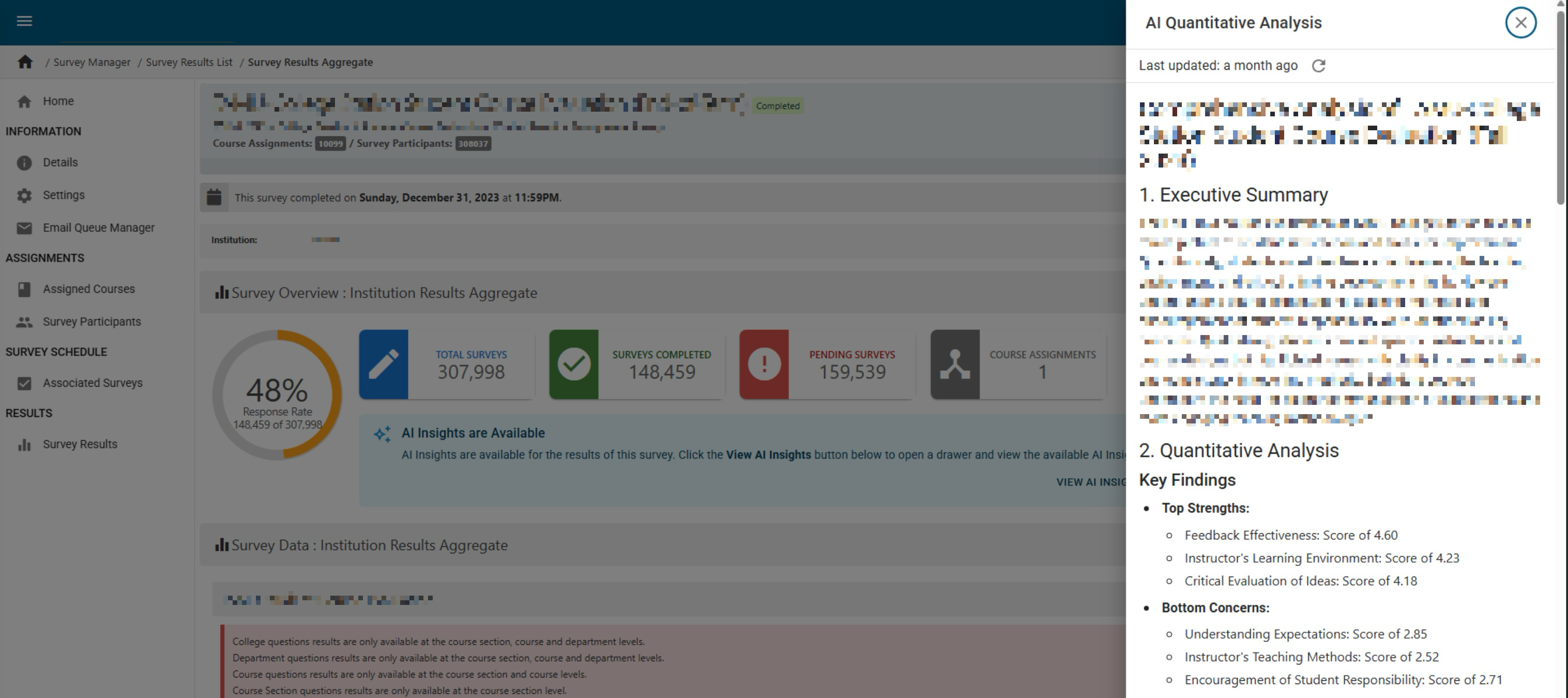

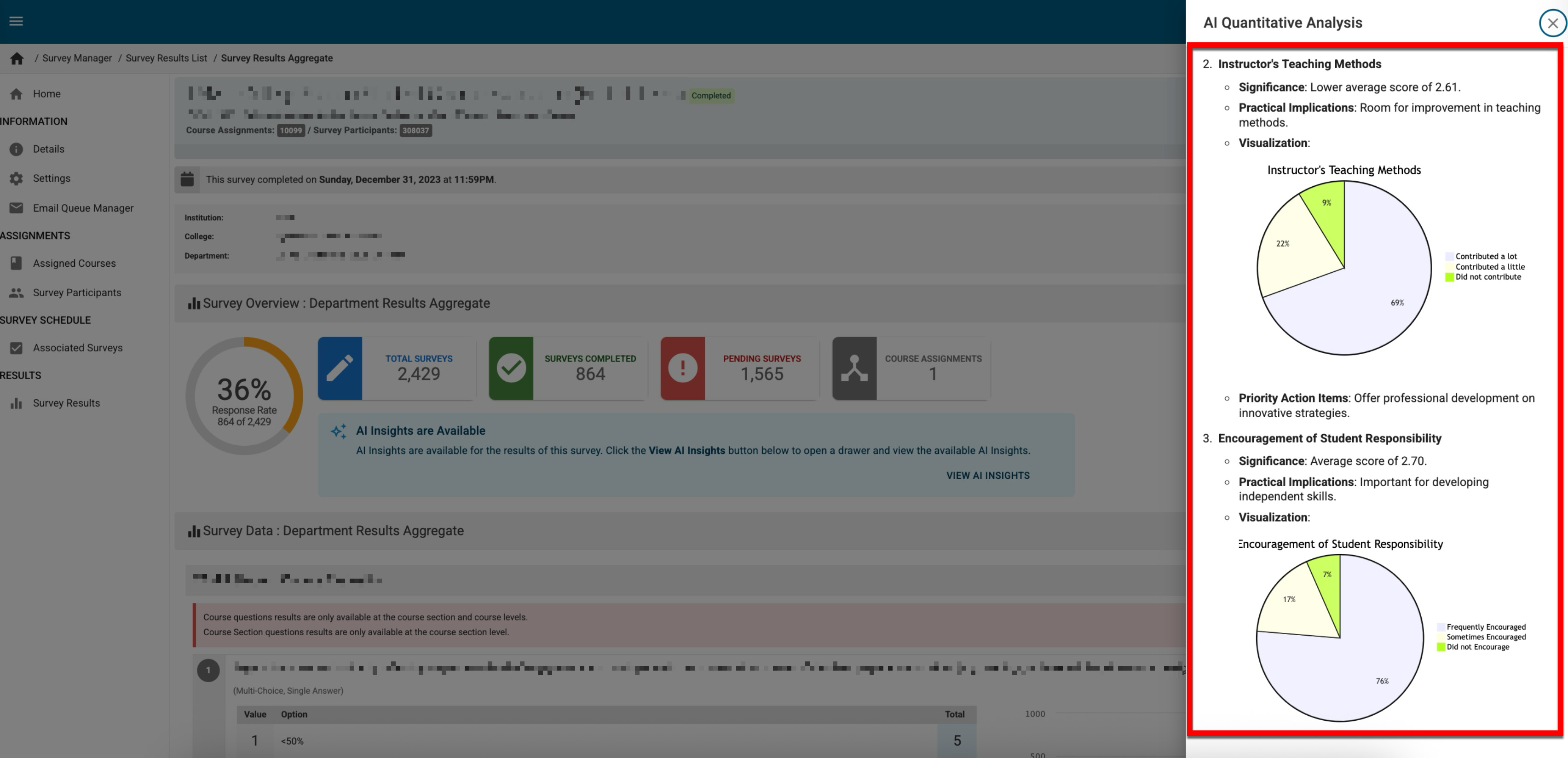
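
The timing change can be pictured with a short Python sketch in which each partial term carries its own notification dates. Everything here is hypothetical and for illustration only: the Term class, its field names, the sample dates, and the notifications_due helper are not part of the platform.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Term:
    """Hypothetical term record; partial terms name their parent term."""

    name: str
    faculty_notification_date: date
    results_available_date: date
    parent_name: Optional[str] = None


def notifications_due(terms, today):
    """Return (term name, notification type) pairs that fall on `today`.

    Each term is checked against its own dates, so a partial term is never
    scheduled from its parent term's timeline.
    """
    due = []
    for term in terms:
        if term.faculty_notification_date == today:
            due.append((term.name, "survey start"))
        if term.results_available_date == today:
            due.append((term.name, "results available"))
    return due


partial_terms = [
    Term("First 8 Weeks", date(2025, 10, 1), date(2025, 10, 24), "Fall 2025"),
    Term("Second 8 Weeks", date(2025, 11, 26), date(2025, 12, 19), "Fall 2025"),
]

due_today = notifications_due(partial_terms, date(2025, 10, 24))
print(due_today)
```

With staggered partial terms, the same check run on different days picks up each partial term's notifications independently.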

Surveys - Sentiment Analysis AI

AI insights have been expanded to equip institutional leadership with actionable, high-level findings without the need for manual data gathering. AI insights now provide quantitative and qualitative analysis at each level, reducing analysis time and improving consistency.

Quantitative Metrics (Institution-wide): These metrics are sourced from survey questions with multiple-choice answer options.

Qualitative Insights: These insights are sourced from survey questions with open-ended responses.

Key Findings:

- Response Rate Challenge
- Organizational Concerns
- Teaching Assistant Supervision Gaps
- Mixed Learning Outcomes

Learn more about survey question types.

Additionally, Institution leadership who need a fast, high-level read on educational quality can view quick-glance information via new metrics charts. This enhancement displays the sentiment analysis in one place, combining quantitative metrics and qualitative insights to quickly spot trends and problem areas.

- Review key performance indicators such as response rate, overall satisfaction, and the share of negative sentiment.
- Track response rates over time. Compare results across the hierarchy associated with a course survey, and view a clear visualization of sentiment distribution.

Learn more about Sentiment Analysis AI for Course Evaluations.

Term Types Manager

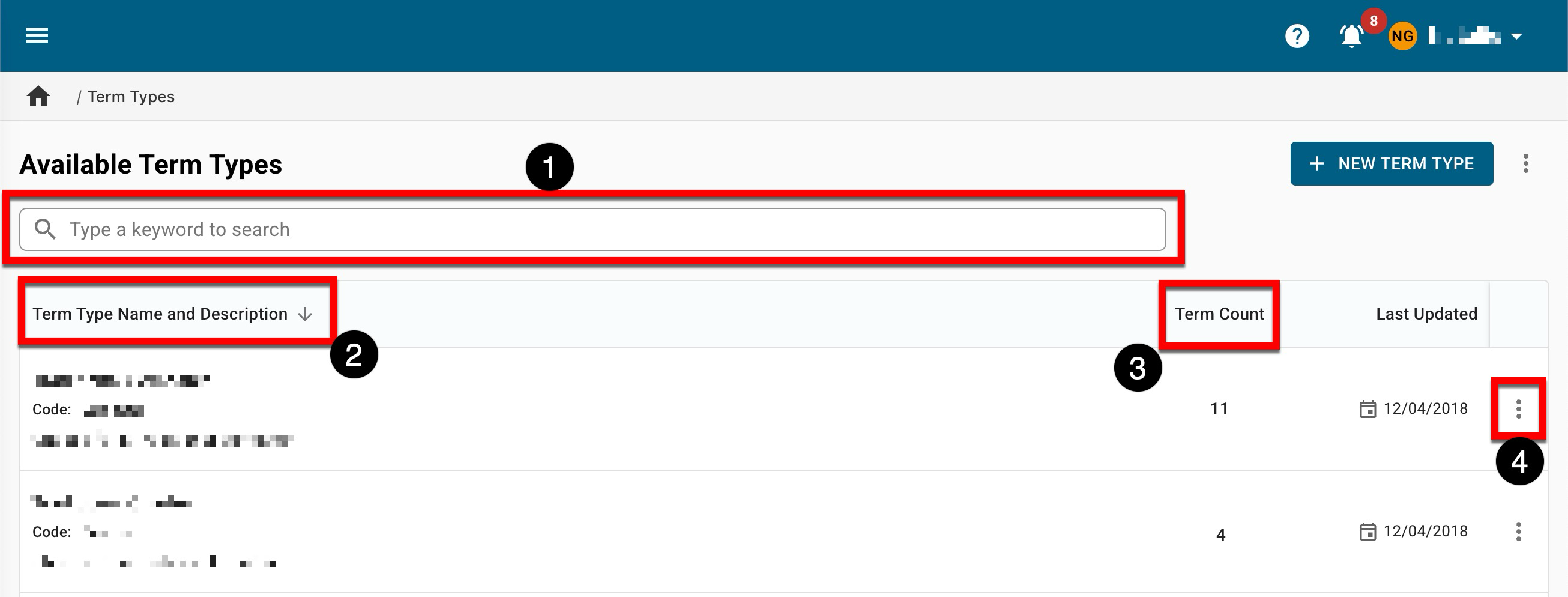

The Term Types Manager has been updated with an improved user interface. Search functionality is available for keyword search (1) to easily locate term types. Term type information is displayed directly below the search bar (2), and the Term Count column displays how many terms are associated with a term type (3). By expanding the Action kebab, term types can be edited and deleted (4). Learn more about Term Types.

User Roles

For Institutions utilizing the platform’s read-only role functionality, the Self Study and Evidence Bank user roles included below have been updated to prevent all read-only roles from exporting or downloading self studies and evidence. Learn more about User Roles.

- Self Study Section Auditor
- Self Study Section Approver
- Self Study Section Editor
- Self Study Chair
- Self Study Liaison
- Self Study Institution Chair
- Evidence Bank Curator
- Evidence Bank Contributor